UCSD CSE 231 (Sp24)

Crew

- Ranjit Jhala (Instructor)

- Nico Lehmann (TA, 🦀)

- Matthew Kolosick (TA)

- Michael Borkowski (TA)

(with many thanks to Joe Politz from whom much of this material is gratefully borrowed!)

Basics - Resources - Schedule - Staff - Grading - Policies

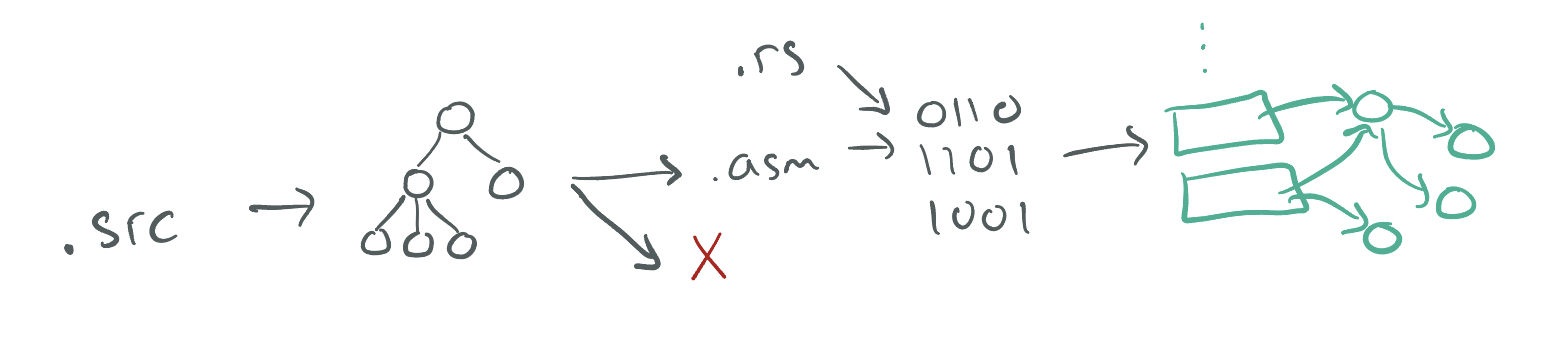

In this course, we'll explore the implementation of compilers: programs that transform source programs into other useful, executable forms. This will include understanding syntax and its structure, checking for and representing errors in programs, writing programs that generate code, and the interaction of generated code with a runtime system.

We will explore these topics interactively in lecure, you will implement an increasingly sophisticated series of compilers throughout the course to learn how different language features are compiled, and you will think through design challenges based on what you learn from implementation.

This web page serves as the main source of announcements and resources for the course, as well as the syllabus.

Basics

- Lecture: Center 105 Tu-Th 2:00-3:20pm

- Discussion: CENTER 212 Fr 2:00-2:50pm

- Exams: (In Friday Discussion Section) May 3 (Week 5), May 31 (Week 9)

- Final Exam: (Optional, to make up exam credit) Tue June 11, 3:00-6:00pm (CENTER 105)

- Podcasts: podcast.ucsd.edu

- Q&A Forum: Piazza

- Gradescope: https://www.gradescope.com

Office Hours

- Ranjit (Thu 1pm - 2pm in CSE 3110)

- Michael (Fri 4pm - 5pm in CSE 3217)

- Nico (Thu 10am-11am https://ucsd.zoom.us/j/98350873892, Fri 10am-11am CSE 240A)

- Matt (Wed 10am-12pm https://ucsd.zoom.us/j/2499498988)

Resources

Textbook/readings: There's no official textbook, but we will link to different online resources for you to read to supplement lecture. Versions of this course have been taught at several universities, so sometimes I'll link to those instructors' materials as well.

Some useful resources are:

- The Rust Book (also with embedded quizzes)

- An Incremental Approach to Compiler Construction

- UMich EECS483

- Northeastern CS4410

Schedule

The schedule below outlines topics, due dates, and links to assignments. The schedule of lecture topics might change slightly, but I post a general plan so you can know roughly where we are headed.

The typical due dates are that assignments and quizzes are due Friday evenings.

Week 10 - Register Allocation

-

Assignment

harlequin(Type Inference) due 6/7 github classroom -

Handouts

- Thursday Handout: (pdf)

Week 9 - GC / Type Inference

-

Handouts

-

Reading and Resources

Week 8 - GC / Type Inference

-

Handouts

Week 7 - Garbage Collection

-

Handouts:

-

Reading and Resources

Week 6 - Closures

- Handouts:

- Reading and Resources

Week 5 - Tail Calls and the Heap

-

Handouts

- Tuesday Handout: (pdf), (annot-pdf)

- Thursday Handout: (pdf)

-

Reading and Resources

Week 4 - Functions and Calling Conventions

-

Handouts and recordings:

-

Reading and Resources:

Week 3 - Tags, Conditionals, and Loops

-

Handouts:

- Thu Handout: (pdf)

-

Reading and resources:

- Memory Representation of Values in Ocaml More discussion of a language with tagged value representations (and Ocaml is type-checked!).

- V8 Blog Post Including Number Representations This goes a little further than we are right now, but focus on the fact that V8, one of the widely deployed JS engines, uses tag bits for its numbers.

Week 2 - Binary Operators, Booleans, and Conditionals

- Assignment (due Friday, April 12, 11:59pm)

- Assignment on Github Classroom

- Handouts:

- Reading and resources:

- Max New on Let and the Stack Max New and Ben Lerner have done a nice job writing up notes on let-bindings and the stack. They don't use exactly the same style or make the same decisions as CSE231, but things are close enough to be useful.

Week 1 - Rust and Source to Assembly Conversion

- Assignment (due Friday, April 5, 23:59:59)

- Assignment on Github Classroom

- Reading and resources:

Staff

Office hours are concentrated on Wed, Thu, Fri, since most assignments are due Friday evening. Please check the calendar before you come in case there have been any changes. When you come to the office hour, we may ask you to put your name in the queue using the whiteboard. Read the description about collaboration below for some context about office hours. The office hours schedule is below; each event has details about remote/in-person:

Grading

Your grade will be calculated from assignments, exams, participation and quizzes.

Assignments are given periodically, typically at one or two week intervals. On each you'll get a score from 0-3 (Incomplete/No Pass, Low Pass, Pass, High Pass).

There are two exams in the course, one in week 5 and one in week 9, given in the Friday discussion sections. Tests also get a score from 0-3. Finals week and the usual final exam block will give an opportunity to make up credit on these if you miss them or get low scores.

For the participation credit, most lectures will come with a 1-2 page handout, and you can submit the handout any time up until the start of the next lecture. Credit is given for reasonable effort in engaging with the notes from the day on the handout.

Quizzes will release each week around Wednesday, and be due Sunday evening. These serve as a review of the past week and a preview of the coming week.

The standards for grade levels are:

-

A:

- Exam point total 5 or higher (High Pass on one exam and Pass or better on the other) (including final make-up)

- One of:

- High Pass on half the assignments, Pass on others, no missing assignments

- High Pass on 4 of 5 assignments from (

diamondback,egg-eater,fer-de-lance,gardener,harlequin) assignments. - High Pass on 3 of 5 assignments from (

diamondback,egg-eater,fer-de-lance,gardener,harlequin) and High Pass in both midterms.

-

B:

- Exam point total 4 or higher (one High Pass and one Low Pass, or two Passes) (including final make-up)

- One of:

- Pass or above on all assignments, up to one missing assignment

- High pass on one assignment from (

boa,cobra,diamondback) and High pass on two assignments from (egg-eater,fer-de-lance,gardener,harlequin).

-

C

- Exam point total 3 or higher (including final make-up)

- Pass or above on half the assignments, any score on the others

You get credit for a quiz by getting most of the questions right.

Engagement is used to add +/- modifiers at the end of the quarter, and won't make the difference between A/B/C etc.

Comprehensive Exam: For graduate students using this course for a comprehensive exam requirement, you must get "A" achievement on the exams. Note that you can use the final exam make-up time to do this!

Policies

Programming

In your professional programming life, some of your work will be highly collaborative with lots of expert advice available from senior developers and from sites like StackOverflow. This is a common case in many Web-focused companies, in academia, and on open-source projects. It’s a great way to get exposed to new techniques, share knowledge, and generally enjoy teamwork. In contrast, some of your work will involve figuring out programming problems on your own, where you are the first person to encounter an issue, or the first person to try using a new library in the context of your application. You should get experience in both types of situations; we might call the former kind of process open to collaboration and the latter closed to collaboration.

In terms of courses, this split also makes sense. Programming assignments serve (at least) two roles. First and foremost, they are a mechanism for you to learn! By directly applying the techniques and skills we discuss in class, you get practice and become a better programmer. Second, they are an assessment mechanism – as instructional staff we use them to evaluate your understanding of concepts as demonstrated by your programs. Open collaboration can reduce frustration while learning and give you chances to enjoy collaboration and lots of help, but may not let us accurately evaluate your understanding. Closed assignments are an opportunity for you to demonstrate what you know by way of programming (and some of the frustration of working through a problem on your own is healthy frustration).

There are two types of assignments in this course:

-

Open collaboration assignments, for which you can talk to anyone else in the course, post snippets of code online, get lots of help from TAs, and generally come up with solutions collaboratively. TAs will be happy to look at your code and suggest fixes, along with explaining them. There are a few restrictions:

- Any code that you didn't write must be cited in the README file that goes

along with your submission

- Example: On an open collaboration assignment, you and another student chat online about the solution, you figure out a particular helper method together. Your README should say “The FOO function was developed in collaboration with Firstname Lastname”

- Example: On an open collaboration assignment, a student posts the compilation strategy they used to handle a type of expression you were struggling with. Your README should say “I used the code from the forum post at [link]”

- Anyone you work with in-person must be noted in your README

- Example: You and another student sit next to each other in the lab, and point out mistakes and errors to one another as you work through the assignment. As a result, your solutions are substantially similar. Your README should say “I collaborated with Firstname Lastname to develop my solution.”

- You cannot share publicly your entire repository of code or paste an entire solution into a message board. Keep snippets to reasonable, descriptive chunks of code; think a dozen lines or so to get the point across.

- You still cannot use whole solutions that you find online (though copy-paste from Stack Overflow, tutorials etc, if you need help with Rust patterns, etc.) You shouldn't get assistance or code from students outside of this offering of the class. All the code that is handed in should be developed by you or someone in the class.

- If you can get ChatGPT, Copilot, or another LLM to generate code that works for the course, feel free, but you must put comments in your code describing the prompt you used to get it if you do. If you have Copilot on, put a comment if it generates an entire method or match case.

This doesn’t mean the staff will be handing out answers. We’ll mostly respond with leading questions and advice, and you shouldn’t expect a direct answer to questions like “am I done?” or “is my code right?”

There is no guarantee the assistance you get from your classmates is correct. It is your responsibility to use your judgment to avoid using an idea on the course message board that is wrong, or doesn’t work with your solution; we won’t necessarily tell you one way or another while the assignment is out.

If we see that you used code from other students and didn’t cite it in the README, the penalty will range from a point deduction to an academic integrity violation, depending on the severity. Always cite your work!

- Any code that you didn't write must be cited in the README file that goes

along with your submission

-

Closed collaboration assignments, where you cannot collaborate with others. You can ask clarification questions as private posts or of TAs. However, TAs will not look at your code or comment on it. Lab/office hours these weeks are for conceptual questions or for questions about past assignments only, no code assistance. On these assignments:

- You cannot look at or use anyone else's code for the assignment

- You cannot discuss the assignment with other students

- You cannot post publicly about the assignment on the course message board (or on social media or other forums). Of course, you can still post questions about material from lecture or past assignments!

- All of the examples in the open collaboration section above would be academic integrity violations on a closed collaboration assignment except for using tutorials/LLMs. If you use code from tutorials/Stack Overflow/LLMs, cite them as described above.

You can always use code from class or shared by the instructional team (properly attributed).

Programming assignments will explicitly list whether they are open or closed collaboration.

You should be familiar with the UCSD guidelines on academic integrity as well.

Late Work

You have a total of six late days that you can use throughout the quarter, but no more than four late days per assignment.

- A late day means anything between 1 second and 23 hours 59 minutes and 59 seconds past a deadline

- If you submit past the late day limit, you get 0 points for that assignment

- There is no penalty for submitting late but within the limit

Regrades

Mistakes occur in grading. Once grades are posted for an assignment, we will allow a short period for you to request a fix (announced along with grade release). If you don't make a request in the given period, the grade you were initially given is final.

Exams

There will be two exams during the quarter (held in discussion section) and a final exam. There are no make-up exams for the tests during the quarter. However, the final exam will have sections that correspond to each of the in-class exams, and if your score on that part of the final is higher than your score on that in-class exam, the exam score replaces it. This includes the case where you miss an in-class exam (scoring a 0), but can regain credit from that part of the final exam. This policy is designed to encourage you to treat the in-class exams as learning opportunities so that you can study any mistakes you make and re-apply that knowledge on the final.

In addition, if you score high enough on the exams during the quarter, you can skip the final exam with no penalty and just have the exam grades applied as your exam score.

You are not allowed any study aids on exams, aside from those pertaining to university-approved accommodations. References will be provided along with exams to avoid unnecessary memorization.

You cannot discuss the content of exams with others in the course until grades have been released for that exam.

Some past exams are available at the link below for reference on format (content changes from offering to offering so this may not be representative):

Laptop/Device Policy in Lecture

There are lots of great reasons to have a laptop, tablet, or phone open during class. You might be taking notes, getting a photo of an important moment on the board, trying out a program that we're developing together, and so on. The main issue with screens and technology in the classroom isn't your own distraction (which is your responsibility to manage), it's the distraction of other students. Anyone sitting behind you cannot help but have your screen in their field of view. Having distracting content on your screen can really harm their learning experience.

With this in mind, the device policy for the course is that if you have a screen open, you either:

- Have only content onscreen that's directly related to the current lecture.

- Have unrelated content open and sit in one of the back two rows of the room to mitigate the effects on other students. I may remind you of this policy if I notice you not following it in class. Note that I really don't mind if you want to sit in the back and try to multi-task in various ways while participating in lecture (I may not recommend it, but it's your time!)

Diversity and Inclusion

We are committed to fostering a learning environment for this course that supports a diversity of thoughts, perspectives and experiences, and respects your identities (including race, ethnicity, heritage, gender, sex, class, sexuality, religion, ability, age, educational background, etc.). Our goal is to create a diverse and inclusive learning environment where all students feel comfortable and can thrive.

Our instructional staff will make a concerted effort to be welcoming and inclusive to the wide diversity of students in this course. If there is a way we can make you feel more included please let one of the course staff know, either in person, via email/discussion board, or even in a note under the door. Our learning about diverse perspectives and identities is an ongoing process, and we welcome your perspectives and input.

We also expect that you, as a student in this course, will honor and respect your classmates, abiding by the UCSD Principles of Community (https://ucsd.edu/about/principles.html). Please understand that others’ backgrounds, perspectives and experiences may be different than your own, and help us to build an environment where everyone is respected and feels comfortable.

If you experience any sort of harassment or discrimination, please contact the instructor as soon as possible. If you prefer to speak with someone outside of the course, please contact the Office of Prevention of Harassment and Discrimination: https://ophd.ucsd.edu/.

Adder

In this assignment you'll implement a compiler for a small language called

Adder, that supports 32-bit integers and three operations – add1, sub1,

and negate. There is no starter code for this assignment; you'll do it from

scratch based on the instructions here.

Setup

You can start by

- accepting the assignment on github, and then

- opening the assignment CodeSpaces

The necessary tools will be present in the CodeSpace.

You may also want to work on your own computer, in which case you'll need to install rust and cargo

https://www.rust-lang.org/tools/install

You may also (depending on your system) need to install nasm.

On my mac I used brew install nasm; on other systems your package manager of choice likely

has a version. On Windows you should use Windows Subsystem for Linux (WSL)

The assignments assume that your computer can build and run x86-64-bit binaries. This is true of most (but not all) mass-market Windows and Linux laptops. Newer Macs use a different ARM architecture, but can also run legacy x86-64-bit binaries, so those are fine as well. You should ensure that whatever you do to build your compiler also runs on the github codespace, standard environment for testing your work.

Rust 101

The first few sections of the Rust Book walk you through installing Rust, as well. We'll assume you've gone through the “Programming a Guessing Game” chapter of that book before you go on, so writing and running a Rust program isn't too weird to you.

Implementing a Compiler for Numbers

We're going to start by just compiling numbers, so we can see how all the infrastructure works. We won't give starter code for this so that you see how to build this up from scratch.

By just compiling numbers, we mean that we'll write a compiler for a language where the “program” file just contains a single number, rather than full-fledged programs with function definitions, loops, and so on. (We'll get there!)

Creating a Project

First, make a new project with

$ cargo new adder

This creates a new directory, called adder, set up to be a Rust project.

The main entry point is in src/main.rs, which is where we'll develop the

compiler. There's also a file called Cargo.toml that we'll use in a little

bit, and a few other directories related to building that we won't be too

concerned with in this assignment.

The Runner

We'll start by just focusing on numbers.

It's useful to set up the goal of our compiler, which we'll come back to repeatedly in this course:

“Compiling” an expression means generating assembly instructions that evaluate it and leave the answer in the

raxregister.

Given this, before writing the compiler, it's useful to spend some time thinking about how we'll run these assembly programs we're going to generate. That is, what commands do we run at the command line in order to get from our soon-to-be-generated assembly to a running program?

We're going to use a little Rust program to kick things off. It will look like

this; you can put this into a file called runtime/start.rs:

#[link(name = "our_code")]

extern "C" {

// The \x01 here is an undocumented feature of LLVM (which Rust uses) that ensures

// it does not add an underscore in front of the name, which happens on OSX

// Courtesy of Max New

// (https://maxsnew.com/teaching/eecs-483-fa22/hw_adder_assignment.html)

#[link_name = "\x01our_code_starts_here"]

fn our_code_starts_here() -> i64;

}

fn main() {

let i : i64 = unsafe {

our_code_starts_here()

};

println!("{i}");

}

This file says:

- We're expecting there to be a precompiled file called

libour_codethat we can load and link against (we'll make it in a few steps) - That file should define a global function called

our_code_starts_here. It takes no arguments and returns a 64-bit integer. - For the

mainof this Rust program, we will call theour_code_starts_herefunction in anunsafeblock. It has to be in anunsafeblock because Rust doesn't and cannot check thatour_code_starts_hereactually takes no arguments and returns an integer; it's trusting us, the programmer, to ensure that, which isunsafefrom its point of view. Theunsafeblock lets us do some kinds of operations that would otherwise be compile errors in Rust. - Then, print the result.

Let's next see how to build a libour_code file out of some x86-64 assembly

that will work with this file. Here's a simple assembly program that has a

global label for our_code_starts_here that has a “function body” that

returns the value 31:

section .text

global our_code_starts_here

our_code_starts_here:

mov rax, 31

ret

Put this into a file called test/31.s if you like, to test things out (you

should now have a runtime/ and a test/ directory that you created).

We can create a standalone binary program that combines these with these

commands (substitute macho64 for elf64 on OSX and if you're on an M1/M2

machine change the invocation to rustc --target x86_64-apple-darwin ....

You may have to run rustup target add x86_64-apple-darwin):

$ nasm -f elf64 test/31.s -o runtime/our_code.o

$ ar rcs runtime/libour_code.a runtime/our_code.o

$ ls runtime

libour_code.a our_code.o start.rs

$ rustc -L runtime/ runtime/start.rs -o test/31.run

$ ./test/31.run

31

The first command assembles the assembly code to an object file. The basic

work there is generating the machine instructions for each assembly

instruction, and enough information about labels like our_code_starts_here to

do later linking. The ar command takes this object file and puts it in a

standard format for library linking used by #[link in Rust. Then

rustc combines that .a file and start.rs into a single executable binary

that we named 31.run.

We haven't written a compiler yet, but we do know how to go from files

containing assembly code to runnable binaries with the help of nasm and

rustc. Our next task is going to be writing a program that generates assembly

files like these.

Generating Assembly

Let's revisit our definition of compiling:

“Compiling” an expression means generating assembly instructions that evaluate it and leave the answer in the

raxregister.

Since, for now, our programs are going to be single expressions (in fact just

single numbers), this means that for a program like “5”, we want to generate

assembly instructions that put the constant 5 into rax.

Let's write a Rust function that does that, with a simple main function that

shows it working on a single hardcoded input; this goes in src/main.rs and is

the start of our compiler:

/// Compile a source program into a string of x86-64 assembly

fn compile(program: String) -> String {

let num = program.trim().parse::<i32>().unwrap();

return format!("mov rax, {}", num);

}

fn main() {

let program = "37";

let compiled = compile(String::from(program));

println!("{}", compiled);

}

You can compile and run this with cargo run:

$ cargo run

Compiling adder v0.1.0 ...

mov rax, 37

Really all I did here was look up the documentation in Rust about converting a

string to an integer and template the number into a mov command. The input

37 is hardcoded, and to use the output like we did above, we'd need to

copy-paste the mov command into a larger assembly file with

our_code_starts_here, and so on.

Here's a more sophisticated main that takes two command-line arguments: a

source file to read and a target file to write the resulting assembly to. It

also puts the generated command into the template we designed for our generated

assembly:

use std::env;

use std::fs::File;

use std::io::prelude::*;

fn main() -> std::io::Result<()> {

let args: Vec<String> = env::args().collect();

let in_name = &args[1];

let out_name = &args[2];

let mut in_file = File::open(in_name)?;

let mut in_contents = String::new();

in_file.read_to_string(&mut in_contents)?;

let result = compile(in_contents);

let asm_program = format!("

section .text

global our_code_starts_here

our_code_starts_here:

{}

ret

", result);

let mut out_file = File::create(out_name)?;

out_file.write_all(asm_program.as_bytes())?;

Ok(())

}

Since this now expects files rather than hardcoded input, let's make a test

file in test/37.snek that just contains 37 as contents. Then we'll read the

“program” (still just a number) from 37.snek and store the resulting

assembly in 37.s. (snek is a silly spelling of snake, which is a theme of

the languages in this course.)

Then we can run our compiler with these command line arguments:

$ cat test/37.snek

37

$ cargo run -- test/37.snek test/37.s

$ cat test/37.s

section .text

global our_code_starts_here

our_code_starts_here:

mov rax, 37

ret

Then we can use the same sequence of commands from before to run the program:

$ nasm -f elf64 test/37.s -o runtime/our_code.o

$ ar rcs runtime/libour_code.a runtime/our_code.o

$ rustc -L runtime/ runtime/start.rs -o test/37.run

$ ./test/37.run

37

We're close to saying we've credibly built a “compiler”, in that we've taken some source program and gone all the way to a generated binary.

The next steps will be to clean up the clumsiness of running 3 post-processing

commands (nasm, ar, and rustc), and then adding some nontrivial

functionality.

Cleaning up with a Makefile

There are a lot of thing we could do to try and assemble and run the program,

and we'll discuss some later in the course. For now, we'll simply tidy up our

workflow by creating a Makefile that runs through the compile-assemble-link

steps for us. Put these rules into a file called Makefile in the root of the

repository (use elf64 on Linux):

test/%.s: test/%.snek src/main.rs

cargo run -- $< test/$*.s

test/%.run: test/%.s runtime/start.rs

nasm -f elf64 test/$*.s -o runtime/our_code.o

ar rcs runtime/libour_code.a runtime/our_code.o

rustc -L runtime/ runtime/start.rs -o test/$*.run

Note: on MACOS

- Write

macho64instead ofelf64and - Write

rustc --target x86_64-apple-darwin ...(if you have an M1/M2 machine)

(Note that make requires tabs not spaces, but we can only use spaces on the

website, so please replace the four spaces indentation with tab characters when

you copy it.)

And then you can run just make test/<file>.run to do the build steps:

$ make test/37.run

cargo run -- test/37.snek test/37.s

Finished dev [unoptimized + debuginfo] target(s) in 0.07s

Running `target/x86_64-apple-darwin/debug/adder test/37.snek test/37.s`

nasm -f macho64 test/37.s -o runtime/our_code.o

ar rcs runtime/libour_code.a runtime/our_code.o

rustc -L runtime/ runtime/start.rs -o test/37.run

The cargo run command will re-run if the .snek file or the compiler

(src/main.rs) change, and the assemble-and-link commands will re-run if the

assembly (.s file) or the runtime (runtime/start.rs) change.

The Adder Language

In each of the next several assignments, we'll introduce a language that we'll implement. We'll start small, and build up features incrementally. We're starting with Adder, which has just a few features –numbers and three operations.

There are a few pieces that go into defining a language for us to compile:

- A description of the concrete syntax – the text the programmer writes.

- A description of the abstract syntax – how to express what the programmer wrote in a data structure our compiler uses.

- A description of the behavior of the abstract syntax, so our compiler knows what the code it generates should do.

Concrete Syntax

The concrete syntax of Adder is:

<expr> :=

| <number>

| (add1 <expr>)

| (sub1 <expr>)

| (negate <expr>)

Abstract Syntax

The abstract syntax of Adder is a Rust datatype, and corresponds nearly

one-to-one with the concrete syntax. We'll show just the parts for add1 and

sub1 in this tutorial, and leave it up to you to include negate to get

practice.

enum Expr {

Num(i32),

Add1(Box<Expr>),

Sub1(Box<Expr>)

}

The Box type is necessary in Rust to create recursive types like these (see

Enabling Recursive Types with Boxes).

If you're familiar with C, it serves roughly the same role as introducing a

pointer type in a struct field to allow recursive fields in structs.

The reason this is necessary is that the Rust compiler calculates a size and

tracks the contents of each field in each variant of the enum. Since an

Expr could be an Add1 that contains another Add1 that contains another

Add1... and so on, there's no way to calculate the size of an enum variant like

Add1(Expr)

(What error do you get if you try?)

Values of the Box type always have the size of a single reference (probably

represented as a 64-bit address on the systems we're using). The address will

refer to an Expr that has already been allocated somewhere. Box is one of

several smart pointer types whose memory are carefully, and automatically,

memory-managed by Rust.

Semantics

A ``semantics'' describes the languages' behavior without giving all of the assembly code for each instruction.

An Adder program always evaluates to a single i32.

- Numbers evaluate to themselves (so a program just consisting of

Num(5)should evaluate to the integer5). add1andsub1expressions perform addition or subtraction by one on their argument.negateproduces the result of the argument multiplied by-1

There are several examples further down to make this concrete.

Here are some examples of Adder programs:

Example 1

Concrete Syntax

(add1 (sub1 5))

Abstract Syntax

Add1(Box::new(Sub1(Box::new(Num(5)))))

Result

5

Example 2

Concrete Syntax

4

Abstract Syntax

Num(4)

Result

4

Example 3

Concrete Syntax

(negate (add1 3))

Abstract Syntax

Negate(Box::new(Add1(Box::new(Num(3)))))

Result

-4

Implement an Interpreter for Adder

As a warm up exercise, implement an interpreter for Adder which is to say,

a plain rust function that evaluates the Expr datatype we defined above.

fn eval(e: &Expr) -> i32 {

match e {

Expr::Num(n) => ...,

Expr::Add1(e1) => ...,

Expr::Sub1(e1) => ...,

}

}

Write a few tests to convince yourself that your interpreter is working as expected.

In the file src/main.rs, you can add a #[cfg(test)] section to write tests

#[cfg(test)]

mod tests {

#[test]

fn test1() {

let expr1 = Expr::Num(10);

let result = eval(expr1);

assert_eq!(result, 10);

}

}

And then you can run the test either in vscode or by running cargo test in the terminal.

Implementing a Compiler for Adder

The overall syntax for the Adder language admits many more features than just

numbers. With the definition of Adder above, we can have programs like (add1 (sub1 6)), for example. There can be any numbers of layers of nesting of the

parentheses, which means we need to think about parsing a little bit more.

Parsing

We're going to design our syntax carefully to avoid thinking too much about parsing, though. The parenthesized style of Adder is a subset of what's called s-expressions. The Scheme and Lisp family of languages are some of the more famous examples of languages built in s-expressions, but recent ones like WebAssembly also use this syntax, and it's a common choice for language development to simplify decision around syntax, which can become quite tricky and won't be our focus in this course.

A grammar for s-expressions looks something like:

s-exp := number

| symbol

| string

| ( <s-exp>* )

That is, an s-expression is either a number, symbol (think of symbol like an identifier name), string, or a parenthesized sequence of s-expressions. Here are some s-expressions:

(1 2 3)

(a (b c d) e "f" "g")

(hash-table ("a" 100) ("b" 1000) ("c" 37"))

(define (factorial n)

(if (== n 1)

1

(factorial (* n (- n 1)))))

(class Point

(int x)

(int y))

(add1 (sub1 37))

One attractive feature of s-expressions is that most programming languages have

libraries for parsing them. There are several crates available for parsing

s-expressions in Rust. You're free to pick another one if you like it, but I'm

going to use sexp because its type definitions

work pretty well with pattern-matching and I find that helpful.

(lexpr also looks interesting, but the

Value type is really clumsy with pattern matching so it's not great for this

tutorial.)

Parsing with S-Expressions

We can add this package to our project by adding it to Cargo.toml, which was

created when you used cargo new. Make it so your Cargo.toml looks like this:

[package]

name = "adder"

version = "0.1.0"

edition = "2021"

[dependencies]

sexp = "1.1.4"

Then you can run cargo build and you should see stuff related to the sexp

crate be downloaded.

We can then use it in our program like this:

use sexp::*;

use sexp::Atom::*;

Then, a function call like this can turn a string into a Sexp:

let sexp = parse("(add1 (sub1 (add1 73)))").unwrap()

(As a reminder, the .unwrap() is our way of telling Rust that we are trusting

this parsing to succeed, and we'll panic! and stop the program if the parse

doesn't succeed. We will talk about giving better error messages in these cases

later.)

Our goal, though, is to use a datatype that we design for our expressions, which we introduced as:

enum Expr {

Num(i32),

Add1(Box<Expr>),

Sub1(Box<Expr>),

}

So we should next write a function that takes Sexps and turns them into

Exprs (or gives an error if we give an s-expression that doesn't match the

grammar of Adder). Here's a function that will do the trick:

fn parse_expr(s: &Sexp) -> Expr {

match s {

Sexp::Atom(I(n)) => Expr::Num(i32::try_from(*n).unwrap()),

Sexp::List(vec) => {

match &vec[..] {

[Sexp::Atom(S(op)), e] if op == "add1" => Expr::Add1(Box::new(parse_expr(e))),

[Sexp::Atom(S(op)), e] if op == "sub1" => Expr::Sub1(Box::new(parse_expr(e))),

_ => panic!("parse error"),

}

},

_ => panic!("parse error"),

}

}

(A Rust note – the parse_expr function takes a reference to Sexp (the type

&Sexp) which means parse_expr will have read-only, borrowed access to some

Sexp that was allocated and stored somewhere else.)

This uses Rust pattern matching to match the specific cases we care about

for Adder – plain numbers and lists of s-expressions. In the case of lists, we

match on two the two specific cases that look like add1 or sub1 followed by

some other s-expression. In those cases, we recursively parse, and use

Box::new to match the signature we set up in enum Expr.

Code Generation

So we've got a way to go from more structure text—s-expressions—stored in

files and produce our Expr structure. Now we just need to go from the Expr

ASTs to generated assembly. Here's one way to do that:

fn compile_expr(e: &Expr) -> String {

match e {

Expr::Num(n) => format!("mov rax, {}", *n),

Expr::Add1(subexpr) => compile_expr(subexpr) + "\nadd rax, 1",

Expr::Sub1(subexpr) => compile_expr(subexpr) + "\nsub rax, 1",

}

}

And putting it all together in main:

fn main() -> std::io::Result<()> {

let args: Vec<String> = env::args().collect();

let in_name = &args[1];

let out_name = &args[2];

let mut in_file = File::open(in_name)?;

let mut in_contents = String::new();

in_file.read_to_string(&mut in_contents)?;

let expr = parse_expr(&parse(&in_contents).unwrap());

let result = compile_expr(&expr);

let asm_program = format!("

section .text

global our_code_starts_here

our_code_starts_here:

{}

ret

", result);

let mut out_file = File::create(out_name)?;

out_file.write_all(asm_program.as_bytes())?;

Ok(())

}

Testing our compiler

Then we can write tests like this add.snek:

$ cat test/add.snek

(sub1 (sub1 (add1 73)))

And run our whole compiler end-to-end:

$ make test/add.run

cargo run -- test/add.snek test/add.s

Finished dev [unoptimized + debuginfo] target(s) in 0.02s

Running `target/x86_64-apple-darwin/debug/adder test/add.snek test/add.s`

nasm -f macho64 test/add.s -o runtime/our_code.o

ar rcs runtime/libour_code.a runtime/our_code.o

rustc -L runtime/ runtime/start.rs -o test/add.run

$ cat test/add.s

section .text

global our_code_starts_here

our_code_starts_here:

mov rax, 73

add rax, 1

sub rax, 1

sub rax, 1

ret

$ ./test/add.run

72

Note: make test/add.run may delete test/add.s as an intermediate file.

If so, run make test/add.s before running cat test/add.s.

This is, of course, a very simple language. This tutorial serves mainly to make us use all the pieces of infrastructure that we'll build on throughout the quarter:

- An assembler (

nasm) and a Rust main program (runtime/start.rs) to build binaries - A definition of abstract syntax (

enum Expr) - A parser for text (

parsefrom thesexpcrate) and a parser for our abstract syntax (parse_expr) - A code generator (

compile_expr) that generates assembly fromExprs

Most of our future assignments will be built from just these pieces, plus extra infrastructure added as we need it.

Your TODOs

- Do the whole tutorial above, creating the project repository as you go. Write several tests to convince yourself that things are working as expected.

- Then, add support for

negateas described in the beginning, and write several tests fornegateas well. - In your terminal, demonstrate your compiler working on at least 5 different

examples by using

caton a sourcesnekfile, then showingmakerunning, usingcaton the resulting.sfile, and then running the resulting binary. Copy this interactino into a file calledtranscript.txt

Hand in your entire repository to the 01-adder assignment on github.

That is you only need to commit and push your cloned assignment

There is no automated grading for this assignment; we want you to practice gaining your own confidence that your solution works (and demonstrating that to us).

Week 2: Boa, Due Tuesday, April 12 (Open Collaboration)

In this assignment you'll implement a compiler for a small language called Boa, that has let bindings and binary operators. The key difference between this language and what we implemented in class is that there can be multiple variables defined within a single let. There are a number of other details where we fill in exact behavior.

Setup

Get the assignment at https://classroom.github.com/a/P5qpkKKh. This will make a private-to-you copy of the repository hosted within the course's organization.

The Boa Language

In each of the next several assignments, we'll introduce a language that we'll implement. We'll start small, and build up features incrementally. We're starting with Boa, which has just a few features – defining variables, and primitive operations on numbers.

There are a few pieces that go into defining a language for us to compile:

- A description of the concrete syntax – the text the programmer writes.

- A description of the abstract syntax – how to express what the programmer wrote in a data structure our compiler uses.

- A description of the behavior of the abstract syntax, so our compiler knows what the code it generates should do.

Concrete Syntax

The concrete syntax of Boa is:

<expr> :=

| <number>

| <identifier>

| (let (<binding> +) <expr>)

| (add1 <expr>)

| (sub1 <expr>)

| (+ <expr> <expr>)

| (- <expr> <expr>)

| (* <expr> <expr>)

<binding> := (<identifier> <expr>)

Here, a let expression can have one or more bindings (that's what the

<binding> + notation means). <number>s are in base 10 and must be

representable as an i32. <identifier>s are names and should be limited to

alphanumeric characters, hyphens, and underscores, and should start with a

letter. (The sexp library handles more than this, but this keeps things

nicely readable.)

Abstract Syntax

The abstract syntax of Boa is a Rust enum. Note that this

representation is different from what we used in

Adder.

enum Op1 {

Add1,

Sub1

}

enum Op2 {

Plus,

Minus,

Times

}

enum Expr {

Number(i32),

Id(String),

Let(Vec<(String, Expr)>, Box<Expr>),

UnOp(Op1, Box<Expr>),

BinOp(Op2, Box<Expr>, Box<Expr>)

}

Semantics

A "semantics" describes the languages' behavior without giving all of the assembly code for each instruction.

A Boa program always evaluates to a single integer.

- Numbers evaluate to

themselves (so a program just consisting of

Number(5)should evaluate to the integer5). - Unary operator expressions perform addition or subtraction by one on

their argument. If the result wouldn't fit in an

i32, the program can have any behavior (e.g. overflow withadd1or underflow withsub1). - Binary operator expressions evaluate their arguments and combine them

based on the operator. If the result wouldn't fit in an

i32, the program can have any behavior (e.g. overflow or underflow with+/-/*). - Let bindings should use lexical scoping: evaluate all the binding expressions to values one by one, and after each, store a mapping from the given name to the corresponding value in both (a) the rest of the bindings, and (b) the body of the let expression. Identifiers evaluate to whatever their current stored value is.

There are several examples further down to make this concrete.

The compiler should stop and report an error if:

- There is a binding list containing two or more bindings with the same name. The error should contain the string

"Duplicate binding" - An identifier is unbound (there is no surrounding let binding for it) The error should contain the string

"Unbound variable identifier {identifier}"(where the actual name of the variable is substituted for{identifier})

If there are multiple errors, the compiler can report any non-empty subset of them.

Here are some examples of Boa programs. In the "Abstract Syntax" parts, we

assume that the program has appropriate use statements to avoid the Expr::

and other prefixes in order to write the examples compactly.

Example 1

Concrete Syntax

5

Abstract Syntax

Number(5)

Result

5

Example 2

Concrete Syntax

(sub1 (add1 (sub1 5)))

Abstract Syntax

UnOp(Sub1, Box::new(UnOp(Add1, Box::new(UnOp(Sub1, Box::new(Number(5)))))))

Result

4

Example 3

Concrete Syntax

(let ((x 5)) (add1 x))

Abstract Syntax

Let(vec![("x".to_string(), Number(5))],

Box::new(UnOp(Add1, Box::new(Id("x".to_string())))))

Result

6

More examples

(sub1 5)

# as an expr

UnOp(Sub1, Box::new(Number(5)))

# evaluates to

4

(let ((x 10) (y 7)) (* (- x y) 2))

# as an expr

Let(vec![("x".to_string(), Number(10)), ("y".to_string(), Number(7))],

Box::new(BinOp(Times, Box::new(BinOp(Minus, Box::new(Id("x".to_string())),

Box::new(Id("y".to_string())))), Box::new(Number(2)))));

# evaluates to

6

Implementing a Compiler for Boa

You've been given a starter codebase that has several pieces of infrastructure:

- A main program (

main.rs) that uses the parser and compiler to produce assembly code from an input Boa text file. You don't need to edit this much except to change howresultis filled in. - A

Makefileand a runner (runtime/start.rs) that are basically the same as the ones from Week 1 - Extra infrastructure for running unit tests in

tests/infra(you don't need to edittests/infra, but you may enjoy reading it). - A test file,

tests/all_tests.rs, which describes the expected output and expected errors for.snekfiles in thetests/directory. You will add your own tests by filling in new entries insuccess_tests!andfailure_tests!; we've provided a few examples. Each entry corresponds to a single.snekfile. You will add a lot more – focus on making these interesting and thorough!

Writing the Parser

The parser will be given a S-expression representing the whole program, and

must build a AST of the Expr data type from this S-expression.

An S-expression in Rust is of the following type:

pub enum Sexp {

Atom(Atom),

List(Vec<Sexp>),

}

pub enum Atom {

S(String),

I(i64),

F(f64),

}

Thus, an example S-expression that could be parsed into a program would be as follows

List(vec![Atom("let"), List(vec![List(vec![Atom("x"), Atom("5")])]), Atom("x")])

which corresponds to

(let ((x 5)) x)

in Boa or

{

let x = 5;

x

}

in Rust.

This should then parse to the AST

Let(vec![("x".to_string(), Number(5))], Id("x".to_string()))

which can then be compiled.

Since most S-expressions are lists, you will need to check the first element of

the list to see if the operation to perform is a let, add1, *, and so on.

If an S-expression is of an invalid form, (i.e. a let with no body, a +

with three arguments, etc.) panic with a message containing the string

"Invalid".

You can assume that an id is a valid string of form [a-zA-z][a-zA-Z0-9]*.

You will, however, have to check that the string does not match any of

the language's reserved words, such as let, add1, and sub1.

The parsing should be implemented in

fn parse_expr(s: &Sexp) -> Expr {

todo!("parse_expr");

}

You can also implement a helper function parse_bind

fn parse_bind(s: &Sexp) -> (String, Expr) {

todo!("parse_bind");

}

which may be helpful for handling let expressions.

Writing the Compiler

The primary task of writing the Boa compiler is simple to state: take an

instance of the Expr type and turn it into a list of assembly

instructions. Start by defining a function that compiles an expression into a

list of instructions:

fn compile_to_instrs(e: &Expr) -> Vec<Instr> {

todo!("compile_to_instrs");

}

which takes an Expr value (abstract syntax) and turns it into a list of

assembly instructions, represented by the Instr type. Use only the

provided instruction types for this assignment; we will be gradually expanding

this as the quarter progresses.

Note: For variable bindings, we used im::HashMap<String, i32> from the

im crate.

We use the immutable HashMap here to make nested scopes easier because we

found it annoying to remember to pop variables when you leave a scope.

You're welcome to use any reasonable strategy here.

The other functions you need to implement are:

fn instr_to_str(i: &Instr) -> String {

todo!("instr_to_str");

}

fn val_to_str(v: &Val) -> String {

todo!("val_to_str");

}

They render individual instances of the Instr type and Val type into a string

representation of the instruction. This second step is straightforward, but

forces you to understand the syntax of the assembly code you are generating.

Most of the compiler concepts happen in the first step, that of generating

assembly instructions from abstract syntax. Feel free to ask or refer to

on-line resources if you want more information about a particular assembly

instruction!

After that, put everything together with a compile function that compiles an

expression into assembly represented by a string.

fn compile(e: &Expr) -> String {

todo!("compile");

}

Assembly instructions

The Instr type is defined in the starter code. The assembly instructions that

you will have to become familiar with for this assignment are:

-

IMov(Val, Val)— Copies the right operand (source) into the left operand (destination). The source can be an immediate argument, a register or a memory location, whereas the destination can be a register or a memory location.Examples:

mov rax, rbx mov [rax], 4 -

IAdd(Val, Val)— Add the two operands, storing the result in its first operand.Example:

add rax, 10 -

ISub(Val, Val)— Store in the value of its first operand the result of subtracting the value of its second operand from the value of its first operand.Example:

sub rax, 216 -

IMul(Val, Val)— Multiply the left argument by the right argument, and store in the left argument (typically the left argument israxfor us)Example:

imul rax, 4

Running

Put your test .snek files in the test/ directory. Run make test/<file>.s

to compile your snek file into assembly.

$ make test/add1.s

cargo run -- test/add1.snek test/add1.s

$ cat test/add1.s

section .text

global our_code_starts_here

our_code_starts_here:

mov rax, 131

ret

To actually evaluate your assembly code, we need to link it with runtime.rs to

create an executable. This is covered in the Makefile.

$ make test/add1.run

nasm -f elf64 test/add1.s -o runtime/our_code.o

ar rcs runtime/libour_code.a runtime/our_code.o

rustc -L runtime/ runtime/start.rs -o test/add1.run

Finally you can run the file by executing to see the evaluated output:

$ ./test/add1.run

131

Ignoring or Changing the Starter Code

You can change a lot of what we describe above; it's a (relatively strong) suggestion, not a requirement. You might have different ideas for how to organize your code or represent things. That's a good thing! What we've shown in class and this writeup is far from the only way to implement a compiler.

To ease the burden of grading, we ask that you keep the following in mind: we

will grade your submission (in part) by copying our own tests/ directory in

place of the one you submit and running cargo test -- --test-threads 1. This relies on the

interface provided by the Makefile of producing .s files and .run files.

It doesn't rely on any of the data definitions or function signatures in

src/main.rs. So with that constraint in mind, feel free to make new

architectural decisions yourself.

Strategies, and FAQ

Working Incrementally

If you are struggling to get started, here are a few ideas:

- Try to tackle features one at a time. For example, you might completely ignore let expressions at first, and just work on addition and numbers to start. Then you can work into subtraction, multiplication, and so on.

- Some features can be broken down further. For example, the let expressions in this assignment differ from the ones in class by having multiple variables allowed per let expression. However, you can first implement let for just a single variable (which will look quite a bit like what we did in class!) and then extend it for multiple bindings.

- Use git! Whenver you're in a working state with some working tests, make a commit and leave a message for yourself. That way you can get back to a good working state later if you end up stuck.

FAQ

What should (let ((x 5) (z x)) z) produce?

From the PA writeup: “Let bindings should evaluate all the binding expressions to values one by one, and after each, store a mapping from the given name to the corresponding value in both (a) the rest of the bindings, and (b) the body of the let expression. Identifiers evaluate to whatever their current stored value is.”

Do Boa programs always have the extra parentheses around the bindings?

In Boa, there's always the extra set of parens around the list.

Can we write additional helper functions?

Yes.

Do we care about the text return from panic?

Absolutely. Any time you write software you should strive to write thoughtful error messages. They will help you while debugging, you when you make a mistake coming back to your code later, and anyone else who uses your code.

As for the autograder, we expect you to catch parsing and compilation errors. For parsing errors you should panic! an error message containing the word Invalid. For compilation errors, you should catch duplicate binding and unbound variable identifier errors and panic! Duplicate binding and Unbound variable identifier {identifier} respectively. We've also added these instructions to the PA writeup.

How should we check that identifiers are valid according to the description in the writeup?

From the PA writeup: “You can assume that an id is a valid string of form [a-zA-z][a-zA-Z0-9]*. You will, however, ...”

Assume means that we're not expecting you to check this for the purposes of the assignment (though you're welcome to if you like).

What should the program () compile to?

Is () a Boa program (does it match the grammar)? What should the compiler do with a program that doesn't match the grammar?

What's the best way to test? What is test case

A few suggestions:

- First, make sure to test all the different expressions as a baseline

- Then, look at the grammar. There are lots of places where

<expr>appears. In each of those positions, any other expression could appear. Soletcan appear inside+and vice versa, and in the binding position of let, and so on. Make sure you've tested enough nested expressions to be confident that each expression works no matter the context - Names of variables are interesting – the names can appear in different places and have different meanings depending on the structure of let. Make sure that you've tried different combinations of

letnaming and uses of variables.

My tests non-deterministically fail sometimes

You are probably running cargo test instead of cargo test -- --test-threads 1. The testing infrastructure interacts with the file system in a way that can cause data races when

tests run in parallel. Limiting the number of threads to 1 will probably fix the issue.

Grading

A lot of the credit you get will be based on us running autograded tests on your submission. You'll be able to see the result of some of these on while the assignment is out, but we may have more that we don't show results for until after assignments are all submitted.

We'll combine that with some amount of manual grading involving looking at your

testing and implementation strategy. You should have your own thorough test

suite (it's not unreasonable to write many dozens of tests; you probably don't

need hundreds), and you need to have recognizably implemented a compiler. For

example, you could try to calculate the answer for these programs and

generate a single mov instruction: don't do that, it doesn't demonstrate the

learning outcomes we care about.

Any credit you lose will come with instructions for fixing similar mistakes on future assignments.

Extension: Assembling Directly from Rust

Boa is set up as a traditional ahead-of-time compiler that generates an assembly file and (with some help from system linkers) eventually a binary.

Many language systems work this way (it's what rustc, gcc, and clang do,

for instance), but many modern systems also generate machine code directly from

the process that runs the compiler, and the compiler's execution can be

interleaved with users' program execution. This isn't a new

idea,

Smalltalk and LISP are early examples of languages built atop runtime code

generation. JavaScript engines in web browsers are likely the most ubiquitous

use case.

Rust, with detailed control over memory and a broad package system, provides a pretty good environment for doing this kind of runtime code generation. In these assignment extensions, we'll explore how to build on our compiler to create a system in this style, and showcase some unique features it enables.

These extensions are not required, nor are they graded. However, we'd be delighted to hear about what you're trying for them in office hours, see what you've done, and give feedback on them. The staff have done a little bit of work to proof-of-concept some of this, but you'll be largely on your own and things aren't guaranteed to be obviously possible.

The primary tool we think is particularly useful here is

dynasm.

(You might also find assembler useful,

but it hasn't been updated in a while and dynasm was what we found easiest

to use). The basic idea is that dynasm provides Rust macros that build up a

vector of bytes representing machine instructions. References to these vectors

can be cast using

mem::transmute Rust functions,

which can be called from our code.

As a first step towards building a dynamic system, you should try building a

REPL, or read-eval-print loop, re-using as much as possible from the Boa

compiler. That is, you should be able to support interactions like the below,

where one new syntactic form, define, has been added.

$ cargo run -- -i # rather than input/output files, specify -i for interactive

> (let ((x 5)) (+ x 10))

15

> (define x 47)

> x

47

> (+ x 4)

51

A sample of how to get started with this is at adder-dyn. We won't give any hints or support beyond this except:

- The REPL is probably best implemented as a

whileloop inmain - You'll need to figure out how to store the

defined variables on the heap somewhere and refer to them from the generated code

Happy hacking!

Week 3: Cobra, Due Friday, April 26 (Open Collaboration)

In this assignment you'll implement a compiler for a small language called Cobra, which extends Boa with booleans, conditionals, variable assignment, and loops.

Setup

Get the assignment at https://classroom.github.com/a/tnyP6D51 This will make a private-to-you copy of the repository hosted within the course's organization.

The Cobra Language

Concrete Syntax

The concrete syntax of Cobra is:

<expr> :=

| <number>

| true

| false

| input

| <identifier>

| (let (<binding>+) <expr>)

| (<op1> <expr>)

| (<op2> <expr> <expr>)

| (set! <name> <expr>)

| (if <expr> <expr> <expr>)

| (block <expr>+)

| (loop <expr>)

| (break <expr>)

<op1> := add1 | sub1 | isnum | isbool

<op2> := + | - | * | < | > | >= | <= | =

<binding> := (<identifier> <expr>)

true and false are literals. Names used in let cannot have the name of

other keywords or operators (like true or false or let or block).

Numbers should be representable as a signed 63-bit number (e.g. from

-4611686018427387904 to 4611686018427387903).

Abstract Syntax

You can choose the abstract syntax you use for Cobra. We recommend something like this:

enum Op1 { Add1, Sub1, IsNum, IsBool, }

enum Op2 { Plus, Minus, Times, Equal, Greater, GreaterEqual, Less, LessEqual, }

enum Expr {

Number(i32),

Boolean(bool),

Id(String),

Let(Vec<(String, Expr)>, Box<Expr>),

UnOp(Op1, Box<Expr>),

BinOp(Op2, Box<Expr>, Box<Expr>),

Input,

If(Box<Expr>, Box<Expr>, Box<Expr>),

Loop(Box<Expr>),

Break(Box<Expr>),

Set(String, Box<Expr>),

Block(Vec<Expr>),

}

Semantics

A "semantics" describes the languages' behavior without giving all of the assembly code for each instruction.

A Cobra program always evaluates to a single integer, a single boolean, or ends

with an error. When ending with an error, it should print a message to

standard error (eprintln! in Rust works well for this) and a non-zero exit

code (std::process::exit(N) for nonzero N in Rust works well for this).

inputexpressions evaluate to the first command-line argument given to the program. The command-line argument can be any Cobra value: a valid number ortrueorfalse. If no command-line argument is provided, the value ofinputisfalse. When running the program the argument should be provided astrue,false, or a base-10 number value.- All Boa programs evaluate in the same way as before, with one

exception: if numeric operations would overflow a 63-bit integer, the program

should end in error, reporting

"overflow"as a part of the error. - If the operators other than

=are used on booleans, an error should be raised from the running program, and the error should contain "invalid argument". Note that this is not a compilation error, nor can it be in all cases due toinput's type being unknown until the program starts. - The relative comparison operators like

<and>evaluate their arguments and then evaluate totrueorfalsebased on the comparison result. - The equality operator

=evaluates its arguments and compares them for equality. It should raise an error if they are not both numbers or not both booleans, and the error should contain "invalid argument" if the types differ. - Boolean expressions (

trueandfalse) evaluate to themselves ifexpressions evaluate their first expression (the condition) first. If it'sfalse, they evaluate to the third expression (the “else” block), and to the second expression if any other value (including numbers).blockexpressions evaluate the subexpressions in order, and evaluate to the result of the last expression. Blocks are mainly useful for writing sequences that includeset!, especially in the body of a loop.set!expressions evaluate the expression to a value, and change the value stored in the given variable to that value (e.g. variable assignment). Theset!expression itself evaluates to the new value. If there is no surrounding let binding for the variable the identifier is considered unbound and an error should be reported.loopandbreakexpressions work together. Loops evaluate their subexpression in an infinite loop untilbreakis used. Break expressions evaluate their subexpression and the resulting value becomes the result of the entire loop. Typically the body of a loop is written withblockto get a sequence of expressions in the loop body.isnumandisboolare primitive operations that test their argument's type;isnum(v)evaluates totrueifvis a number andfalseotherwise, andisbool(v)evaluates totrueifvis a boolean andfalseotherwise.

There are several examples further down to make this concrete.

The compiler should stop and report an error if:

- There is a binding list containing two or more bindings with the same name.

The error should contain the string

"Duplicate binding" - An identifier is unbound (there is no surrounding let binding for it) The

error should contain the string

"Unbound variable identifier {identifier}"(where the actual name of the variable is substituted for{identifier}) - A

breakappears outside of any surroundingloop. The error should contain "break" - An invalid identifier is used (it matches one of the keywords). The error should contain "keyword"

If there are multiple errors, the compiler can report any non-empty subset of them.

Here are some examples of Cobra programs.

Example 1

Concrete Syntax

(let ((x 5))

(block (set! x (+ x 1))))

Abstract Syntax Based on Our Design

Let(vec![("x".to_string(), Number(5))],

Box::new(Block(

vec![Set("x".to_string(),

Box::new(BinOp(Plus, Id("x".to_string()),

Number(1)))])))

Result

6

Example 2

(let ((a 2) (b 3) (c 0) (i 0) (j 0))

(loop

(if (< i a)

(block

(set! j 0)

(loop

(if (< j b)

(block (set! c (sub1 c)) (set! j (add1 j)))

(break c)

)

)

(set! i (add1 i))

)

(break c)

)

)

)

Result

-6

Example 3

This program calculates the factorial of the input.

(let

((i 1) (acc 1))

(loop

(if (> i input)

(break acc)

(block

(set! acc (* acc i))

(set! i (+ i 1))

)

)

)

)

Implementing a Compiler for Cobra

The starter code makes a few infrastructural suggestions. You can change these as you feel is appropriate in order to meet the specification.

Reporting Dynamic Errors

We've provided some infrastructure for reporting errors via the

snek_error

function in start.rs. This is a function that can be called from the

generated program to report an error. for now we have it take an error code as

an argument; you might find the error code useful for deciding which error

message to print. This is also listed as an extern in the generated

assembly startup

code.

Calculating Input

We've provided a

parse_input

stub for you to fill in to turn the command-line argument to start.rs into a

value suitable for passing to our_code_starts_here. As a reminder/reference,

the first argument in the x86_64 calling convention is stored in rdi. This

means that, for example, moving rdi into rax is a good way to get “the

answer” for the expression input.

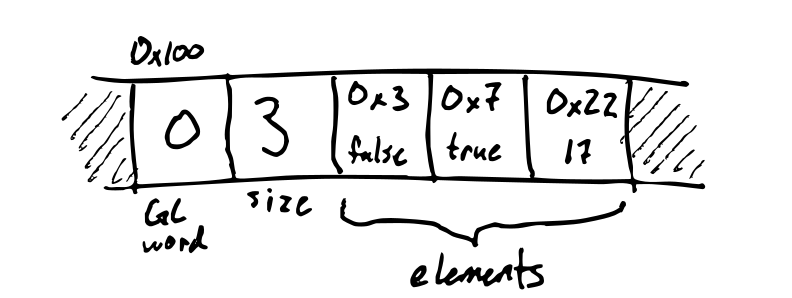

Representations

In class we chose representations with 0 as a tag bit for numbers and 1 for

booleans with the values 3 for true and 1 for false. You do not

have to use those, though it's a great starting point and we recommend it. Your

only obligation is to match the behavior described in the specification, and if

you prefer a different way to distinguish types, you can use it. (Keep in mind,

though, that you still must generate assembly programs that have the specified

behavior!)

Running and Testing

The test format changed slightly to require a test name along with a test

file name. This is to support using the same test file with different

command line arguments. You can see several of these in the sample

tests.

Note that providing input is optional. These also illustrate how to check for

errors.

If you want to try out a single file from the command line (and perhaps from a

debugger like gdb or lldb), you can still run them directly from the

command line with:

$ make tests/some-file.run

$ ./tests/some-file.run 1234

where the 1234 could be any valid command-line argument.

As a note on running all the tests, the best option is to use make test,

which ensures that cargo build is run first and independently before cargo test.

Grading

As with the previous assignment, a lot of the credit you get will be based on us running autograded tests on your submission. You'll be able to see the result of some of these on while the assignment is out, but we may have more that we don't show results for until after assignments are all submitted.

We'll combine that with some amount of manual grading involving looking at your

testing and implementation strategy. You should have your own thorough test

suite (it's not unreasonable to write many dozens of tests; you probably don't

need hundreds), and you need to have recognizably implemented a compiler. For

example, you could try to calculate the answer for these programs and

generate a single mov instruction: don't do that, it doesn't demonstrate the

learning outcomes we care about.

Any credit you lose will come with instructions for fixing similar mistakes on future assignments.

FAQ

Some of my tests fail with a No such file or directory error

The initial version of the starter code contained an error in the testing infrastructure. If you cloned before we fixed it, you'll have to update the code. You can update the code by running:

git remote add upstream https://github.com/ucsd-cse231/03-cobra

git pull upstream main --allow-unrelated-histories

This will merge all commits from the template into your repository. Alternatively, you can also

clone https://github.com/ucsd-cse231/03-cobra and manually replace your tests/

directory.

Extension: Using Dynamic Information to Optimize

A compiler for Cobra needs to generate extra instructions to check for booleans

being used in binary operators. We could use a static type-checker to avoid

these, but at the same time, the language is fundamentally dynamic because the

compiler cannot know the type of input until the program starts running

(which happens after it is compiled). This is the general problem that systems

for languages like JavaScript and Python face; it will get worse when we

introduce functions in the next assignment.

However, if our compiler can make use of some dynamic information, we can do better.

There are two instructive optimizations we can make with dynamic information, one for standalone programs and one at the REPL.

Eval

Add a new command-line option, -e, for “eval”, that evaluates a program

directly after compiling it with knowledge of the command-line argument. The

usage should be:

cargo run -- -e file.snek <arg>

That is, you provide both the file and the command-line argument. When called

this way, the compiler should skip any instructions used for checking for

errors related to input. For example, for this program, if a number is given

as the argument, we could omit all of the tag checking related to the input

argument (and since 1 is a literal, we could recover essentially the same

compilation as for Boa).

(+ 1 input)

For this program, if input is a boolean, we should preserve that the program

throws an error as usual.

Known Variables at the REPL

Similarly, after a define statement evaluates at the REPL, we can know that

variable's tag and use that information to compile future entries. For example,

in this REPL sequence, we define a numeric variable and use it in an operator

later. We could avoid tag checks for x in the later use:

> (define x (+ 3 4))

> (+ x 10)

Note a pitfall here – if you allow set! on defined variables, their types

could change mid-expression, so there are some restrictions on when this should

be applied. Make sure to test this case.

Happy hacking!

Discussion

It's worth re-emphasizing that a static type-checker could recover a lot of

this performance, and for Cobra it's pretty straightforward to implement a

type-checker (especially for expressions that don't involve input).

However, we'll soon introduce functions, which add a whole new layer of potential dynamism and unknown types (because of function arguments), so the same principles behind these simple cases become much more pronounced. And a language with functions and a static type system is quite different from a language with functions and no static type system.

Week 5: Diamondback, Due Friday, May 3 (Closed Collaboration)

In this assignment you'll implement a compiler for a language called Diamondback, which has top-level function definitions.

This assignment is closed to collaboration.

Setup

Get the assignment at https://classroom.github.com/a/20qeISvT This will make a private-to-you copy of the repository hosted within the course's organization.

The Diamondback Language

Concrete Syntax

The concrete syntax of Diamondback has a significant change from past

languages. It distinguishes top-level declarations from expressions. The new

parts are function definitions, function calls, and the print unary operator.

<prog> := <defn>* <expr> (new!)

<defn> := (fun (<name> <name>*) <expr>) (new!)

<expr> :=

| <number>

| true

| false

| input

| <identifier>

| (let (<binding>+) <expr>)

| (<op1> <expr>)

| (<op2> <expr> <expr>)

| (set! <name> <expr>)

| (if <expr> <expr> <expr>)

| (block <expr>+)

| (loop <expr>)

| (break <expr>)

| (<name> <expr>*) (new!)

<op1> := add1 | sub1 | isnum | isbool | print (new!)

<op2> := + | - | * | < | > | >= | <= | =

<binding> := (<identifier> <expr>)

Abstract Syntax

You can choose the abstract syntax you use for Diamondback.

Semantics

A Diamondback program always evaluates to a single integer, a single boolean,

or ends with an error. When ending with an error, it should print a message to

standard error (eprintln! in Rust works well for this) and a non-zero exit

code (std::process::exit(N) for nonzero N in Rust works well for this).

A Diamondback program starts by evaluating the <expr> at the end of the

<prog>. The new expressions have the following semantics:

(<name> <expr>*)is a function call. It first evaluates the expressions to values. Then it evaluates the body of the corresponding function definition (the one with the given<name>) with the values bound to each of the parameter names in that definition.(print <expr>)evaluates the expression to a value and prints it to standard output followed by a\ncharacter. Theprintexpression itself should evaluate to the given value.

There are several examples further down to make this concrete.

The compiler should stop and report an error if:

- There is a call to a function name that doesn't exist

- Multiple functions are defined with the same name

- A function's parameter list has a duplicate name

- There is a call to a function with the wrong number of arguments

inputis used in a function definition (rather than in the expression at the end). It's worth thinking of that final expression as themainfunction or method

If there are multiple errors, the compiler can report any non-empty subset of them.

Here are some examples of Diamondback programs.

Example 1

(fun (fact n)

(let

((i 1) (acc 1))

(loop

(if (> i n)

(break acc)

(block

(set! acc (* acc i))

(set! i (+ i 1))

)

)

)

)

)

(fact input)

Example 2

(fun (isodd n)

(if (< n 0)

(isodd (- 0 n))

(if (= n 0)

false

(iseven (sub1 n))

)

)

)

(fun (iseven n)

(if (= n 0)

true

(isodd (sub1 n))

)

)

(block

(print input)

(print (iseven input))

)

Proper Tail Calls

Implement safe-for-space tail calls for Diamondback. Test with deeply-nested recursion. To make sure you've tested proper tail calls and not just tail recursion, test deeply-nested mutual recursion between functions with different numbers of arguments.

Implementing a Compiler for Diamondback

The main new feature in Diamondback is functions. You should choose and implement a calling convention for these. You're welcome to use the “standard” x86_64 sysv as a convention, or use some of what we discussed in class, or choose something else entirely. Remember that when calling runtime functions in Rust, the generated code needs to respect that calling convention.

A compiler for Diamondback does not need guarantee safe-for-space tail calls, but they are allowed.

Running and Testing

Running and testing are as for Cobra, there is no new infrastructure.

Reference interpreter

You may check the behavior of programs using this interpreter.

Grading

As with the previous assignment, a lot of the credit you get will be based on us running autograded tests on your submission. You'll be able to see the result of some of these on while the assignment is out, but we may have more that we don't show results for until after assignments are all submitted.

We'll combine that with some amount of manual grading involving looking at your